When Artificial Intelligence Gets It Wrong

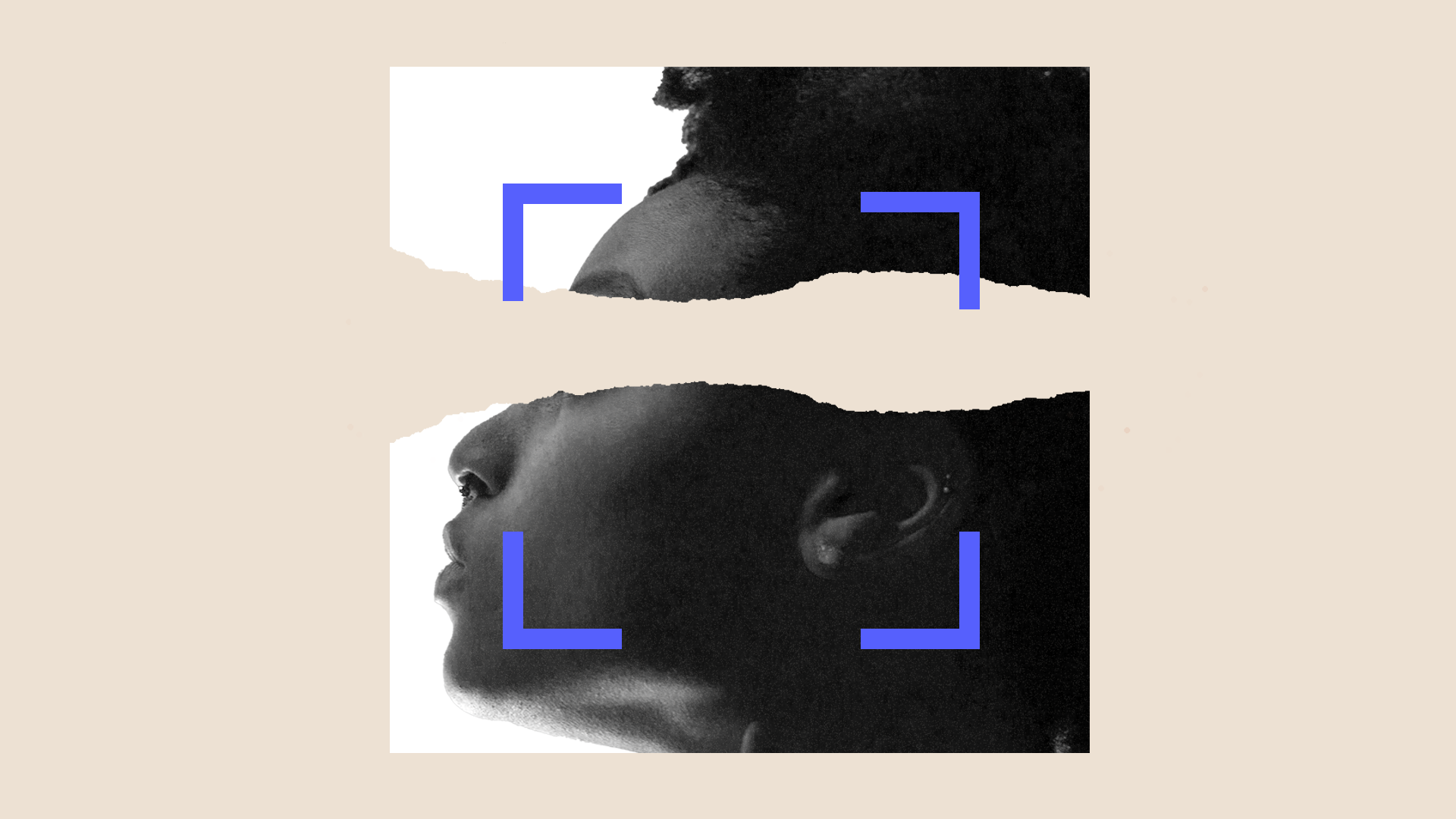

Unregulated and untested AI technologies have put innocent people at risk of being wrongly convicted.

09.19.23 By Christina Swarns

Porcha Woodruff was eight months pregnant when she was arrested for carjacking. The Detroit police used facial recognition technology to run an image of the carjacking suspect through a mugshot database, and Ms. Woodruff’s photo was among those returned.

Ms. Woodruff, an aesthetician and nursing student who was preparing her two daughters for school, was shocked when officers told her that she was being arrested for a crime she did not commit. She was questioned over the course of 11 hours at the Detroit Detention Center.

A month later, the prosecutor dismissed the case against her based on insufficient evidence.

Ms. Woodruff’s ordeal demonstrates the very real risk that cutting-edge artificial intelligence-based technology — like the facial recognition software at issue in her case — presents to innocent people, especially when such technology is neither rigorously tested nor regulated before it is deployed.

The Real-world Implications of AI

Time and again, facial recognition technology gets it wrong, as it did in Ms. Woodruff’s case. Although its accuracy has improved over recent years, this technology still relies heavily on vast quantities of information that it is incapable of assessing for reliability. And, in many cases, that information is biased.

In 2016, Georgetown University’s Center on Privacy & Technology noted that at least 26 states allow police officers to run, or to request, facial recognition searches against their driver’s license and ID databases. Based on this figure, the center estimated that one in two American adults has their image stored in a law enforcement facial recognition network. Furthermore, given the disproportionate rate at which African Americans are subject to arrest, the center found that facial recognition systems that rely on mug shot databases are likely to include an equally disproportionate number of African Americans.

More disturbingly, facial recognition software is significantly less reliable for Black and Asian people, who, according to a study by the National Institute of Standards and Technology, were 10 to 100 times more likely to be misidentified than white people. The institute’s study, along with other independent studies, found that the systems’ algorithms struggled to distinguish between facial structures and darker skin tones.

The use of such biased technology has had real-world consequences for innocent people throughout the country. To date, six people that we know of have reported being falsely accused of a crime following a facial recognition match — all six were Black. Three of those who were falsely accused in Detroit have filed lawsuits, one of which urges the city to gather more evidence in cases involving facial recognition searches and to end the “facial recognition to line-up pipeline.”

Former Detroit Police Chief James Craig acknowledged that if the city’s officers were to use facial recognition by itself, it would yield misidentifications “96% of the time.”

The Problem With Depending on AI

Even when an AI-powered technology is properly tested, the risks of a wrongful arrest and wrongful conviction remain and are exacerbated by these new tools.

That’s because when AI identifies a suspect, it can create a powerful, unconscious bias against the technology-identified person, one that narrows the focus of an investigation and steers it away from other suspects.

Indeed, such technology-induced tunnel vision has already had damaging ramifications.

For example, in 2021, Michael Williams was jailed in Chicago for the first-degree murder of Safarian Herring based on a ShotSpotter alert that police received. Although ShotSpotter purports to triangulate a gunshot’s location through an AI algorithm and a network of microphones, an investigation by the Associated Press found that the system is statistically unreliable because it can frequently miss live gunfire or mistake other sounds for gunshots. Still, based on the alert and a silent security video that showed a car driving through an intersection, Mr. Williams was arrested even though police and prosecutors never established a motive explaining his alleged involvement, had no witnesses to the murder, and found no physical evidence tying him to the crime. According to a federal lawsuit later filed by Mr. Williams, investigators also ignored other leads, including reports that another person had previously attempted to shoot Mr. Herring. Mr. Williams spent nearly a year in jail before the case against him was dismissed.

Cases like Ms. Woodruff’s and Mr. Williams’ highlight the dangers of law enforcement’s overreliance on AI technology, including an unfounded belief that such technology is a fair and objective processor of data.

Absent comprehensive testing or oversight, the introduction of additional AI-driven technology will only increase the risk of wrongful conviction and may displace the effective policing strategies, such as community engagement and relationship-building, that we know can reduce wrongful arrests.

Addressing AI in the Criminal Legal System

We enter this fall with a number of significant victories under our belt, including seven exonerations since the start of the year. Through the cases of people like Rosa Jimenez and Leonard Mack, we’ve leveraged significant advances in DNA technology and other sciences to free innocent people from prison.

We are committed to countering the harmful effects of emerging technologies, advocating for research on AI’s reliability and validity, and urging consideration of the ethical, legal, social and racial justice implications of its use.

We support a moratorium on the use of facial recognition technology in the criminal legal system until such time as research establishes its validity and impacted communities are given the opportunity to weigh in on the scope of its implementation.

We are pushing for more transparency around so-called “black box technologies” — technologies whose inner workings are hidden from users.

We believe that any law enforcement reliance on AI technology in a criminal case must be immediately disclosed to the defense and subjected to rigorous adversarial testing in the courtroom.

Building on President Biden’s executive order directing the National Academy of Sciences to study certain AI-based technologies that can lead to wrongful convictions, we are also collaborating with various partners to collect the necessary data to enact reforms.

And, finally, we encourage Congress to make explicit the ways in which it will regulate investigative technologies to protect personal data.

Only through these efforts can we protect innocent people from further risk of wrongful conviction in today’s digital age.

With gratitude,

Christina Swarns

Executive Director, Innocence Project

The post When Artificial Intelligence Gets It Wrong appeared first on Innocence Project.

This content originally appeared on Innocence Project and was authored by Justin Chan.

Justin Chan | Radio Free (2023-09-19T15:09:43+00:00) When Artificial Intelligence Gets It Wrong. Retrieved from https://www.radiofree.org/2023/09/19/when-artificial-intelligence-gets-it-wrong/
